There has been a marked increase in consumer applications of Augmented Reality, primarily due to the recent release of Augmented Reality frameworks on popular mobile platforms, as well as the future potential of HoloLens and Magic Leap. However, these applications are currently approached through the lens of apps and games. You have to switch between them, because only one can be active at a time. They are still constrained by the architecture of their platforms.

Another approach is to think of Augmented Reality as layers of digital feeds superimposed on the real world. A user would subscribe to feeds from various sources, and the system would decide what to render based on the device's location and orientation and the objects in its view. This is similar to how a web browser renders a page based on its URL and the HTML, CSS, and JavaScript behind it.

My Role

This was a month-long solo project as part of the SciFi Prototyping course. It was my first attempt at AR, and I learned a lot about its usability issues throughout my design iterations. I also had to overcome many roadblocks in implementing the prototype, including an unstable version of AR.js and a failed attempt at using ARToolKit 6 through Unity. I wish I had a newer iPhone and had used ARKit.

Fall 2017, 4 weeks

Sketching

Prototyping

Full-stack Development

HRQR

AR.js

AR.js Marker Training

ThreeJS + WebGL

Flask + SQLite

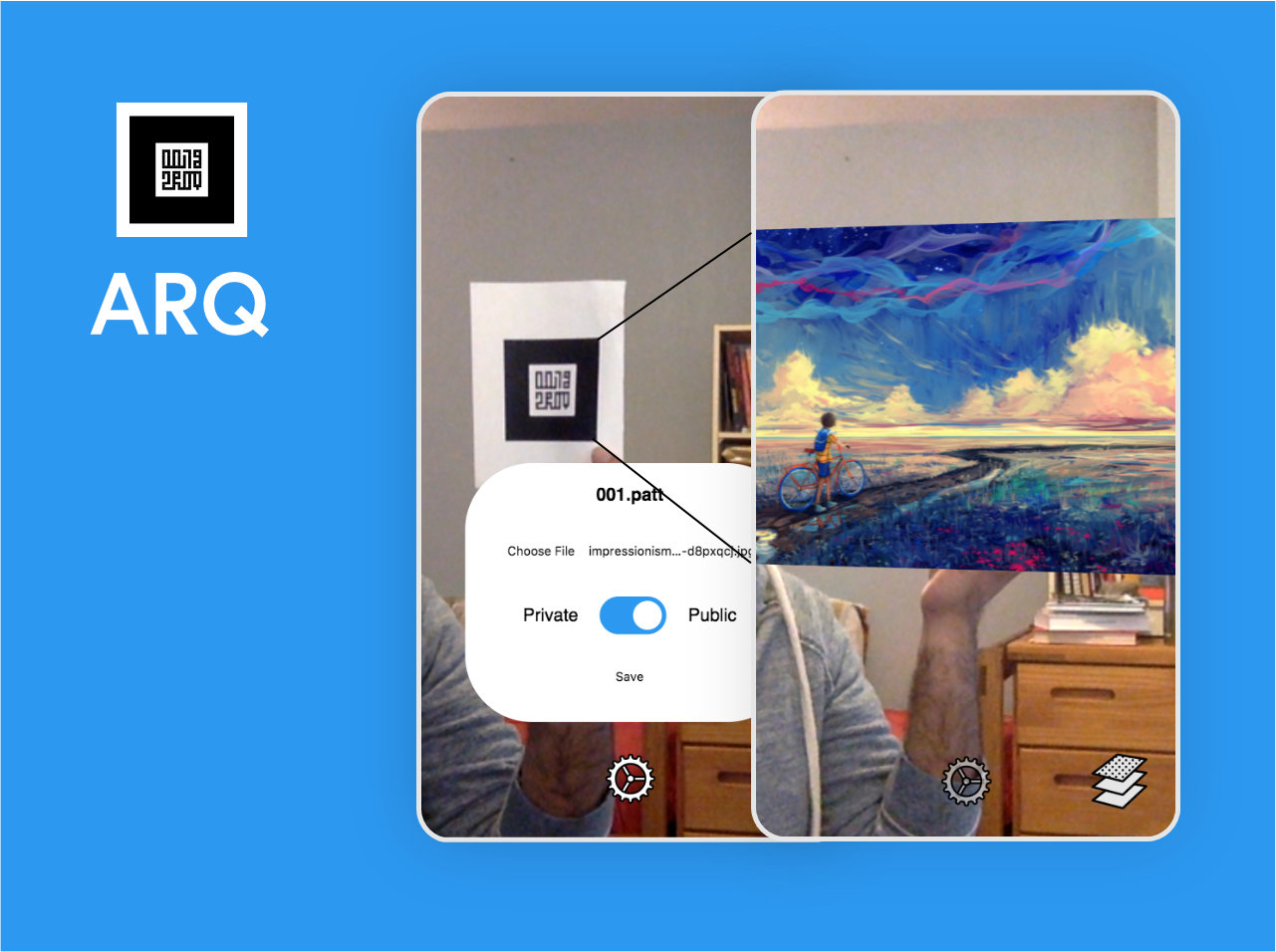

Prototype

The prototype web-application demonstrates public and private layers of images across multiple users.

When User A's phone detects a marker that has no image associated with it, the user can upload an image for that marker and set it as public or private. Once uploaded, the image immediately appears over the marker. Private images are visible only to their owner, whereas public images are displayed for everyone using the system.

On detecting the same marker, User B's phone shows the public image uploaded by User A for that marker. User B can upload another image on the same marker and set it as public or private. This image is displayed on top of the image uploaded by User A.

If both images are private, each user sees only their own image. But if both users upload a public image, the most recent image is displayed on top.
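The visibility rules above can be sketched in a few lines of Python. This is a minimal illustration, not the prototype's actual code: the `Layer` record, its field names, and the `visible_layers` function are all hypothetical, and the sketch assumes that stacking order is decided purely by upload time.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """One uploaded image attached to a marker (hypothetical schema)."""
    marker_id: str
    owner: str
    image_url: str
    is_public: bool
    uploaded_at: float  # upload time, e.g. seconds since the epoch


def visible_layers(layers, marker_id, viewer):
    """Return the layers `viewer` should see on `marker_id`, bottom to top.

    A layer is visible if it is public or if it belongs to the viewer.
    Assumption: newer uploads are drawn on top of older ones.
    """
    candidates = [
        layer
        for layer in layers
        if layer.marker_id == marker_id
        and (layer.is_public or layer.owner == viewer)
    ]
    return sorted(candidates, key=lambda layer: layer.uploaded_at)
```

With a public image from User A and a later private image from User B on the same marker, `visible_layers` would return only A's image for User A, and A's image followed by B's image for User B, so B's private image ends up on top for B alone.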

Due to the performance limitations of my phone, I could not implement a way to interact with and swipe through multiple images. To improve usability, I ensured that the image upload box remains visible once the user has started interacting with it, even if the marker moves out of the camera's view.

Future Considerations

Like the internet, such an AR system would need its own standards and protocols, and a regulatory body that thinks them through and establishes them. It also raises many security and privacy concerns. Should you be able to see what others see in their AR vision? Do you have the right to block public AR feeds? How will we handle cross-device compatibility issues? Will we have differences in rendering, like we have with emojis, font rendering, and implementation differences in HTML and CSS? Should you be able to display something over my face or body?

I think such a system has the potential to change the way we interact with reality. However, due to present limitations and the large scope of the idea, I could only explore a tiny part of the system.